Here’s an overview of some of the key updates for different platforms and the Editor. For full details, check out the release notes.

Developed in partnership with Google’s Android Gaming and Graphics team, Optimized Frame Pacing for Android provides more consistent frame rates and a smoother gameplay experience by distributing frames more evenly over time, with less variance between frame intervals.
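To illustrate what "less variance" means, here is a minimal sketch with two hypothetical frame-time traces. Both average 30 fps, but one alternates between fast and slow frames. The function name `frame_interval_stats` and the sample traces are made up for illustration. This is not Unity's or Google's frame pacing implementation or API; it only shows the metric that frame pacing aims to reduce.

```python
import statistics


def frame_interval_stats(intervals_ms):
    """Return (mean interval in ms, average fps, population std dev in ms)."""
    mean = statistics.fmean(intervals_ms)
    fps = 1000.0 / mean
    # The standard deviation of frame intervals is a simple measure of jitter.
    jitter = statistics.pstdev(intervals_ms)
    return mean, fps, jitter


# Hypothetical traces: six frames each, both averaging 33.3 ms per frame (30 fps).
uneven = [1000 / 60, 1000 / 20] * 3  # alternating ~16.7 ms and 50 ms frames
even = [1000 / 30] * 6               # every frame takes ~33.3 ms

for name, trace in (("uneven", uneven), ("even", even)):
    _, fps, jitter = frame_interval_stats(trace)
    print(f"{name}: {fps:.1f} fps, jitter {jitter:.1f} ms")
```

Both traces report the same average frame rate, but only the evenly paced one has near-zero jitter. That difference is what players perceive as stutter, even when an fps counter looks the same.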

Mobile developers will also benefit from improved OpenGL support. We have added OpenGL multithreading on iOS to improve performance on low-end iOS devices that don’t support Metal (approximately 25% of iOS devices that run Unity games).

We have also added OpenGL support for the SRP Batcher on both iOS and Android, to improve CPU performance in projects that use the Lightweight Render Pipeline (LWRP).

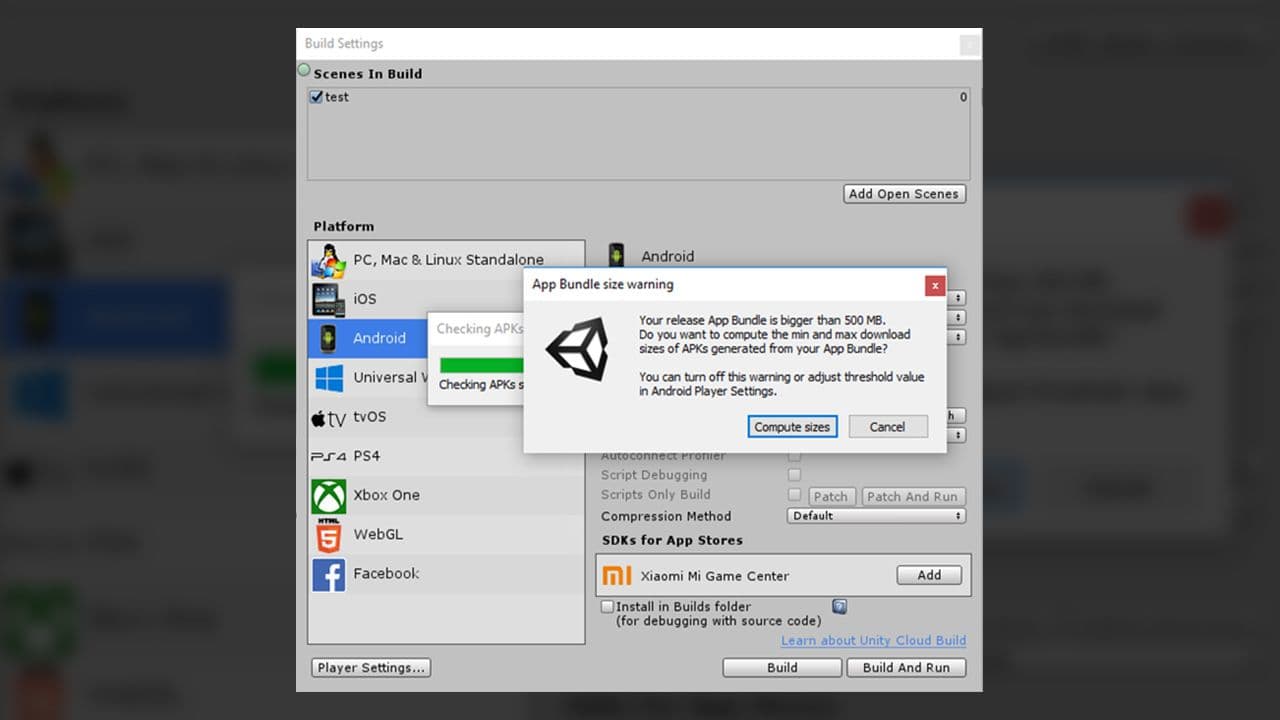

This new feature makes it easier to determine the final application size for different targets.

We continue to make the Editor leaner and more modular by making several existing features available as packages, including Unity UI, 2D Sprite Editor, and 2D Tilemap Editor. You can integrate, upgrade or remove them via the Package Manager.

This revamped system for your target platforms helps streamline the development workflow. It’s in early Preview, and we’d like users to try out the new workflow and give us feedback.

We have included support for face tracking, 2D image tracking, 3D object tracking, environment probes, and more. All of these features are in Preview.

HDRP now supports VR projects (in Preview). This support is currently limited to Windows 10 and Direct3D 11 devices, and VR projects in HDRP must use Single Pass Stereo rendering.

Vuforia support has been migrated from Player Settings to the Package Manager, giving you access to Vuforia Engine 8.3, the latest version.